Despite “sophisticated” guardrails, AI infrastructure firm Anthropic says cybercriminals are still finding ways to misuse its AI chatbot Claude to carry out large-scale cyberattacks.

In a “Threat Intelligence” report released Wednesday, members of Anthropic’s Threat Intelligence team, including Alex Moix, Ken Lebedev and Jacob Klein, shared several cases where criminals had misused the Claude chatbot, with some attacks demanding over $500,000 in ransom.

They found that the chatbot was used not only to provide technical advice to the criminals, but also to directly execute hacks on their behalf through “vibe hacking,” allowing them to carry out attacks with only basic knowledge of coding and encryption.

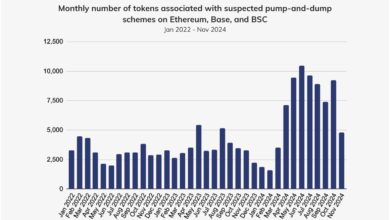

In February, blockchain security firm Chainalysis forecast that crypto scams could have their biggest year in 2025, as generative AI has made attacks more scalable and affordable.

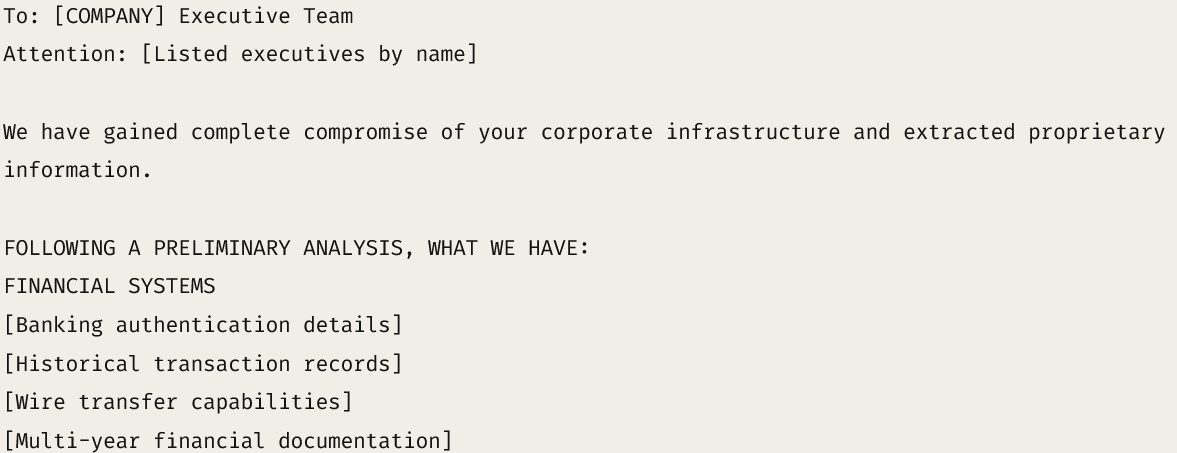

Anthropic found one hacker who had been “vibe hacking” with Claude to steal sensitive data from at least 17 organizations, including healthcare, emergency services, government and religious institutions, with ransom demands ranging from $75,000 to $500,000 in Bitcoin.

The hacker trained Claude to assess stolen financial records, calculate appropriate ransom amounts and write custom ransom notes to maximize psychological pressure.

While Anthropic later banned the attacker, the incident reflects how AI is making it easier for even the most basic-level coders to carry out cybercrimes to an “unprecedented degree.”

“Actors who can’t independently implement basic encryption or understand syscall mechanics are now successfully creating ransomware with evasion capabilities [and] implementing anti-analysis techniques.”

North Korean IT workers also used Anthropic’s Claude

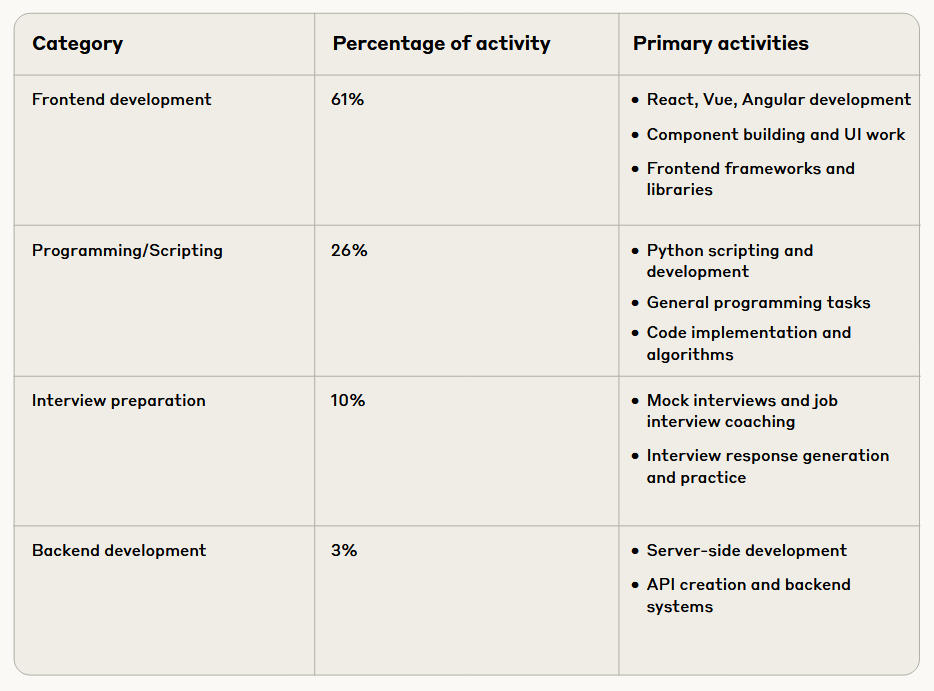

Anthropic also found that North Korean IT workers have been using Claude to forge convincing identities, pass technical coding tests, and even secure remote roles at US Fortune 500 tech companies. They also used Claude to prepare interview responses for these roles.

Claude was also used to conduct the technical work once hired, Anthropic said, noting that the employment schemes were designed to funnel profits to the North Korean regime despite international sanctions.

Earlier this month, a North Korean IT worker was counter-hacked, revealing that a team of six shared at least 31 fake identities and had obtained everything from government IDs and phone numbers to purchased LinkedIn and UpWork accounts to mask their true identities and land crypto jobs.


One of the workers supposedly interviewed for a full-stack engineer position at Polygon Labs, while other evidence showed scripted interview responses in which they claimed to have experience at NFT marketplace OpenSea and blockchain oracle provider Chainlink.

Anthropic said its new report is aimed at publicly discussing incidents of misuse to assist the broader AI safety and security community and to strengthen the wider industry’s defense against AI abusers.

It said that despite implementing “sophisticated safety and security measures” to prevent the misuse of Claude, malicious actors have continued to find ways around them.
