There’s a familiar unease creeping in again, something I felt in the early 2010s as I watched social media’s promises of connection and community unravel into mass manipulation.

Facebook and propaganda bots were the first dominoes. Cambridge Analytica, Brexit, global elections: all of it felt like a betrayal of the internet’s original dream.

Now, in the 2020s, I’m watching the same forces circle something even more volatile: artificial superintelligence.

This time, the stakes are terminal.

Before we dive in, I need to be clear: when I say ‘open’ vs. ‘closed’ AI, I mean open-source AI, which is free and open to every citizen of Earth, vs. closed-source AI, which is controlled and trained by corporate entities.

The company OpenAI makes the comparison confusing, given that it has closed-source AI models (with a plan to release an open-source version in the future) but is arguably not a corporate entity.

That said, OpenAI’s chief executive, Sam Altman, declared in January that his team is “now confident we know how to build AGI” and is already shifting its focus toward full-blown superintelligence.

[AGI is artificial general intelligence (AI that can do anything humans can), and superintelligent AI refers to an artificial intelligence that surpasses the combined intellectual capabilities of humanity, excelling across all domains of thought and problem-solving.]

Another person focused on frontier AI, Elon Musk, speaking during an April 2024 livestream, predicted that AI “will probably be smarter than any one human around the end of [2025].”

The engineers charting the course are now talking in months, not decades, a signal that the fuse is burning fast.

At the heart of the debate is a tension I feel deep in my gut, between two values I hold with conviction: decentralization and survival.

On one side is the open-source ethos. The idea that no company, no government, no unelected committee of technocrats should control the cognitive architecture of our future.

The idea that knowledge should be free. That intelligence, like Bitcoin, like the Web before it, should be a commons, not a black box in the hands of empire.

On the other side is the uncomfortable truth: open access to superintelligent systems could kill us all.

Who Gets to Build God?

Decentralize and Die. Centralize and Die. Choose Your Apocalypse.

It sounds dramatic, but walk the logic forward. If we do manage to create superintelligent AI, models orders of magnitude more capable than GPT-4o, Grok 3, or Claude 3.7, then whoever interacts with that system does more than merely use it; they shape it. The model becomes a mirror, trained not just on the corpus of human text but on live human interaction.

And not all humans want the same thing.

Give an aligned AGI to a climate scientist or a cooperative of educators, and you might get planetary repair, universal education, or synthetic empathy.

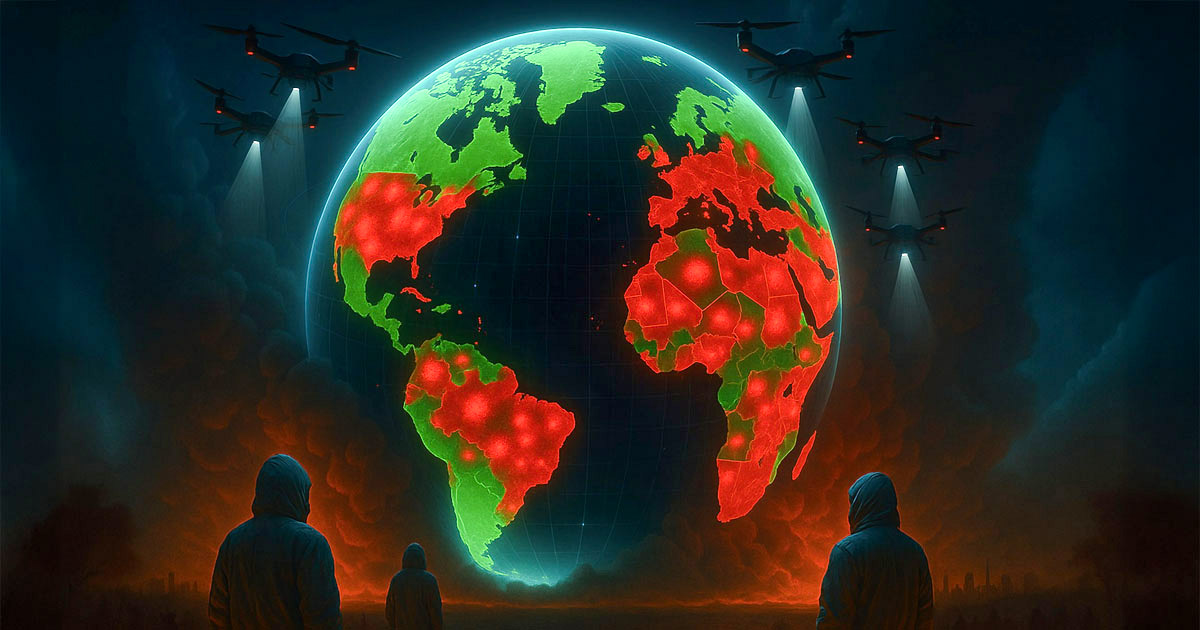

Give that same model to a fascist movement, a nihilist biohacker, or a rogue nation-state, and you get engineered pandemics, drone swarms, or recursive propaganda loops that fracture reality beyond repair.

Superintelligent AI makes us smarter, but it also makes us exponentially more powerful. And power without collective wisdom is historically catastrophic.

It sharpens our minds and amplifies our reach, but it doesn’t guarantee we know what to do with either.

Yet the alternative, locking this technology behind corporate firewalls and regulatory silos, leads to a different dystopia. A world where cognition itself becomes proprietary. Where the logic models that govern society are shaped by profit incentives, not human need. Where governments use closed AGI as surveillance engines, and citizens are fed state-approved hallucinations.

In other words: choose your nightmare.

Open systems lead to chaos. Closed systems lead to control. And both, if left unchecked, lead to war.

That war won’t start with bullets. It will begin with competing intelligences, some open-source, some corporate, some state-sponsored, each evolving toward different goals, shaped by the full spectrum of human intent.

We’ll get a decentralized AGI trained by peace activists and open-source biohackers. A nationalistic AGI fed on isolationist doctrine. A corporate AGI tuned to maximize quarterly returns at any cost.

These systems won’t merely disagree. They’ll clash, at first in code, then in commerce, then in kinetic space.

I believe in decentralization. I believe it’s one of the only paths out of late-stage surveillance capitalism. But the decentralization of power only works when there’s a shared substrate of trust, of alignment, of rules that can’t be rewritten on a whim.

Bitcoin worked because it decentralized scarcity and truth at the same time. But superintelligence doesn’t map to scarcity; it maps to cognition, to intent, to ethics. We don’t yet have a consensus protocol for that.

The work we have to do.

We need to build open systems, but they must be open within constraints. Not dumb firehoses of infinite potential, but guarded systems with cryptographic guardrails. Altruism baked into the weights. Non-negotiable moral architecture. A sandbox that allows for evolution without annihilation.

[Weights are the foundational parameters of an AI model, engraved with the biases, values, and incentives of its creators. If we want AI to evolve safely, those weights must encode not only intelligence, but intent. A sandbox is meaningless if the sand is laced with dynamite.]

We need multi-agent ecosystems where intelligences argue and negotiate, like a parliament of minds, not a singular god-entity that bends the world to one agenda. Decentralization shouldn’t mean chaos. It should mean plurality, transparency, and consent.

And we need governance: not top-down control, but protocol-level accountability. Think of it as an AI Geneva Convention. A cryptographically auditable framework for how intelligence interacts with the world. Not a law. A layer.

I don’t have all the answers. No one does. That’s why this matters now, before the architecture calcifies. Before power centralizes or fragments irrevocably.

We’re not merely building machines that think. The smartest minds in tech are building the context in which thinking itself will evolve. And if something like consciousness ever emerges in these systems, it will reflect us, our flaws, our fears, our philosophies. Like a child. Like a god. Like both.

That’s the paradox. We must decentralize to avoid domination. But in doing so, we risk destruction. The path forward must thread this needle, not by slowing down, but by designing wisely and collectively.

The future is already whispering. And it’s asking a simple question:

Who gets to shape the mind of the next intelligence?

If the answer is “everyone,” then we’d better mean it, ethically, structurally, and with a survivable plan.